MUG'17

Final Program

Conference Location: Ohio Supercomputer Center Bale Theater

MUG'17 meeting attendees gather for a group photo.

Monday, August 14

8:15 - 9:00

Registration and Continental Breakfast

Abstract

Developing high-performing and portable communication libraries that cater to different programming paradigms and different architectures is a challenging task. For performance, these libraries have to leverage the rich capabilities provided by the network interconnect and CPU architecture. This often requires a deep understanding of a wide range of network capabilities, of the interplay between software and CPU architecture, and of its impact on the library implementation and on application communication characteristics. In this tutorial, we will provide insights into developing high-performance network communication libraries on the ARMv8 architecture. The tutorial will introduce the ARMv8 architecture and will cover a broad variety of topics, including the ARM software ecosystem, the relaxed memory model, Neon vector instructions, fine-grain memory tuning, and others.

Bio

Pavel is a Principal Research Engineer at ARM with over 16 years of experience in developing HPC solutions. His work is focused on co-designing software and hardware building blocks for high-performance interconnect technologies, and on developing communication middleware and novel programming models. Prior to joining ARM, he spent five years at Oak Ridge National Laboratory (ORNL) as a research scientist in the Computer Science and Math Division (CSMD). In this role, Pavel was responsible for the research and development of multiple projects in the high-performance communication domain, including Collective Communication Offload (CORE-Direct & Cheetah), OpenSHMEM, and OpenUCX. Before joining ORNL, Pavel spent ten years at Mellanox Technologies, where he led the Mellanox HPC team and was responsible for developing the HPC software stack, including the OFA software stack, OpenMPI, MVAPICH, OpenSHMEM, and others. Pavel is a recipient of the prestigious R&D 100 award for his contribution to the development of the CORE-Direct collective offload technology. In addition, Pavel has contributed to multiple open specifications (OpenSHMEM, MPI, UCX) and numerous open source projects (MVAPICH, OpenMPI, OpenSHMEM-UH, etc.).

10:30 - 11:00

Break

Abstract

High performance computing has begun scaling beyond Petaflop performance towards the Exaflop mark. One of the major concerns throughout the development toward such performance capability is scalability at the component level, the system level, the middleware level, and the application level. A Co-Design approach between the development of the software libraries and the underlying hardware can help to overcome those scalability issues and enable a more efficient design approach towards the Exascale goal. In this tutorial session, we will review the latest development areas within the Co-Design architecture:

1. HPC-X™ Software Toolkit
2. SHArP Technology
3. Hardware tag matching (UCX)
4. Out-of-order RDMA / adaptive routing (UCX)
5. Application acceleration

Bio

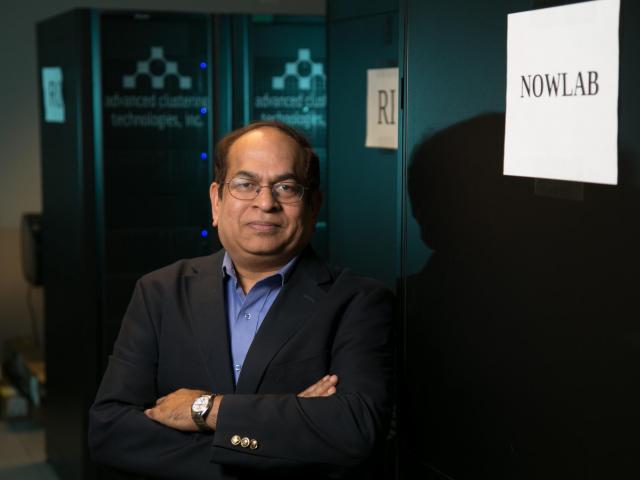

Devendar Bureddy is a Staff Engineer at Mellanox Technologies. At Mellanox, Devendar was instrumental in building several key technologies, such as SHArP and HCOLL. Previously, he was a software developer at The Ohio State University in the Network-Based Computing Laboratory led by Dr. D. K. Panda. At NOWLAB, Devendar was involved in the design and development of MVAPICH2, an open-source high-performance implementation of MPI over InfiniBand and 10GigE/iWARP. He received his Master's degree in Computer Science and Engineering from the Indian Institute of Technology, Kanpur. His research interests include high-speed interconnects, parallel programming models, and HPC software.

12:30 - 1:30

Lunch

Abstract

The first-generation Intel Omni-Path fabric delivers 100 Gb/s of bandwidth per port and very low latency. In this tutorial, we delve into the details of what's new with Intel Omni-Path, including support for heterogeneous clusters and performance on Intel® Xeon® Scalable family Platinum CPUs.

Bio

Ravindra Babu Ganapathi (Ravi) is the Technical Lead/Engineering Manager responsible for the technical leadership, vision, and execution of the Intel Omni-Path libraries. In the past, Ravi was the lead developer and architect for the Intel Xeon Phi offload compiler runtime libraries (MYO, COI) and also made key contributions across the Intel Xeon Phi software stack, including first-generation Linux driver development. Before Intel, Ravi was the lead developer implementing high-performance libraries for image and signal processing tuned for the x86 architecture. Ravi received his MS in Computer Science from Columbia University, NY.

Sayantan Sur is a Software Engineer at Intel Corp, in Hillsboro, Oregon. His work involves high-performance computing, specializing in scalable interconnection fabrics and message passing software (MPI). Before joining Intel Corp, Dr. Sur was a Research Scientist in the Department of Computer Science and Engineering at The Ohio State University. Earlier, he held a post-doctoral position at the IBM T. J. Watson Research Center, NY. He has published more than 20 papers in major conferences and journals related to these research areas. Dr. Sur received his Ph.D. degree from The Ohio State University in 2007.

3:00 - 3:30

Break

Abstract

As GPU-accelerated nodes change the balance of system architectures, communication techniques need to evolve to maximize application scalability. In this tutorial, we will discuss a variety of topics that are key to maximizing future application performance. Topics will include the use of NVLink in MPI applications, asynchronous network transfers with GPUDirect, integrating SHMEM into CUDA (NVShmem), SaturnV (our internal research cluster for deep learning and HPC), and best practices for maximizing GPU system performance.

Bio

Craig Tierney is a Solution Architect at NVIDIA supporting high performance computing and deep learning. He is focused on GPU architectures for high performance computing and deep learning systems, as well as on finding ways to apply deep learning in traditional HPC domains. Prior to joining NVIDIA, Craig spent more than a decade providing high performance computing architecture and computational science support to NOAA and several other government and educational organizations, including DOE, DOD, NASA, and Stanford University. Craig holds a Ph.D. in Aerospace Engineering Sciences from the University of Colorado at Boulder.

6:00 - 9:30

Reception Dinner at King Avenue 5

945 King Ave

Columbus, OH 43210

Tuesday, August 15

7:45 - 8:15

Registration and Continental Breakfast

8:15 - 8:30

Opening Remarks

Dave Hudak, Executive Director, Ohio Supercomputer Center

Dhabaleswar K (DK) Panda, The Ohio State University

Abstract

While much of the emphasis in machine learning, high-end computing, and cloud computing is on the “processor wars” and the choice of GPU or CPU for particular algorithms, high-performance interconnects remain an essential ingredient for achieving performance at large scale. This talk will focus on the role MVAPICH has played at the Texas Advanced Computing Center (TACC) across multiple generations of several interconnect technologies, and why it has been critical not only in maximizing interconnect performance but also overall system performance. The talk will include examples of how poorly tuned interconnects can mean the difference between success and failure for large systems, and of how the MVAPICH software continues to provide significant performance advantages across a range of applications and interconnects.

Bio

Dr. Stanzione has been the Executive Director of the Texas Advanced Computing Center (TACC) at The University of Texas at Austin since July 2014, having previously served as Deputy Director. He is the principal investigator (PI) for a National Science Foundation (NSF) grant to deploy and support Stampede2, a large-scale supercomputer that will have over twice the system performance of TACC’s original Stampede system. Stanzione is also the PI of TACC's Wrangler system, a supercomputer for data-focused applications. For six years he was co-director of CyVerse, a large-scale NSF life sciences cyberinfrastructure. Stanzione was also a co-principal investigator for TACC's Ranger and Lonestar supercomputers, large-scale NSF systems previously deployed at UT Austin. Stanzione received his bachelor's degree in electrical engineering and his master's degree and doctorate in computer engineering from Clemson University.

Abstract

This talk will provide an overview of the MVAPICH project (past, present and future). Future roadmap and features for upcoming releases of the MVAPICH2 software family (including MVAPICH2-X, MVAPICH2-GDR, MVAPICH2-Virt, MVAPICH2-EA and MVAPICH2-MIC) will be presented. Current status and future plans for OSU INAM, OEMT and OMB will also be presented.

Bio

DK Panda is a Professor and University Distinguished Scholar of Computer Science and Engineering at the Ohio State University. He has published over 400 papers in the area of high-end computing and networking. The MVAPICH2 (High Performance MPI and PGAS over InfiniBand, iWARP and RoCE) libraries, designed and developed by his research group (http://mvapich.cse.ohio-state.edu), are currently being used by more than 2,800 organizations worldwide (in 85 countries). More than 424,000 downloads of this software have taken place from the project's site. This software powers several InfiniBand clusters in the TOP500 list, including the 1st, 15th, 20th and 44th ranked systems. The RDMA packages for Apache Spark, Apache Hadoop and Memcached, together with the OSU HiBD benchmarks from his group (http://hibd.cse.ohio-state.edu), are also publicly available. These libraries are currently being used by more than 240 organizations in 31 countries. More than 22,900 downloads of these libraries have taken place. He is an IEEE Fellow. The group has also been focusing on co-designing Deep Learning frameworks and MPI libraries. A high-performance and scalable version of the Caffe framework is available from the High-Performance Deep Learning (HiDL) Project site. More details about Prof. Panda are available at http://www.cse.ohio-state.edu/~panda.

10:00 - 10:30

Break

Abstract

High-performance computing is being applied to solve the world's most daunting problems, including researching climate change, studying fusion physics, and curing cancer. MPI is a key component in this work, and as such, the MVAPICH team plays a critical role in these efforts. In this talk, I will discuss recent science that MVAPICH has enabled and describe future research that is planned. I will detail how the MVAPICH team has addressed past problems and list the requirements that future work will demand.

Bio

Adam is a member of the Development Environment Group within Livermore Computing. His background is in MPI development, collective algorithms, networking, and parallel I/O. He is responsible for supporting MPI on Livermore's Linux clusters. He is a project lead for the Scalable Checkpoint / Restart library and mpiFileUtils, two projects that use MPI to help users manage large data sets. He leads the CORAL burst buffer working group for Livermore. In recent work, he has been investigating how to employ MPI and fast storage in deep learning frameworks like LBANN.

Abstract

Robust MPI implementations offer powerful mechanisms for improving the performance of scalable parallel applications running on different high-performance computing (HPC) platforms. Indeed, it is the inherent complexity of the interactions between applications and platforms that results in performance engineering challenges, some of which are closely tied to how the MPI software is configured and tuned. Application-specific and platform-specific factors are consequential to the performance outcomes and must be understood in order to take advantage of the performance-enhancing features a particular MPI system has to offer. The MPI Tools Interface (MPI_T), introduced as part of the MPI 3.0 standard, gives performance tools and external software an opportunity to introspect and understand MPI runtime behaviour at a deeper level in order to detect performance issues. The interface also provides a mechanism to reconfigure the MPI library dynamically at run time to fine-tune performance. In this talk, I will present the work being done to integrate MVAPICH2 and the TAU Performance System to create a performance engineering framework for MPI applications. This framework builds on the MPI_T interface to offer runtime introspection, online monitoring, recommendation generation, and autotuning capabilities. Examples will be given that demonstrate the need for such a framework and the advantages it can bring for optimization.

Bio

Allen D. Malony is a Professor in the Department of Computer and Information Science at the University of Oregon (UO), where he directs parallel computing research projects, notably the TAU parallel performance system project. He has extensive experience in performance benchmarking and characterization of high-performance computing systems, and has developed performance evaluation tools for a range of parallel machines during the last 30 years. Malony is also interested in computational and data science. He is the Director of the newly established Oregon Advanced Computing Institute for Science and Society (OACISS) at the University of Oregon. Malony was awarded the NSF National Young Investigator award, was a Fulbright Research Scholar to The Netherlands and Austria, and received the Alexander von Humboldt Research Award for Senior U.S. Scientists from the Alexander von Humboldt Foundation. Recently, he was the Fulbright-Tocqueville Distinguished Chair to France. Malony is the CEO of ParaTools, Inc., which he founded with Dr. Sameer Shende in 2004. ParaTools SAS, a French company they started in 2014, is wholly owned by ParaTools, Inc. ParaTools specializes in performance analysis and engineering, HPC applications and optimization, and parallel software, hardware, and tools.

Abstract

Performance and scalability are central concerns for MVAPICH2 and for the HPC community more broadly. The Open Fabrics Interface (OFI) is an effort led by the OpenFabrics Alliance to minimize the impedance mismatch between applications and fabric hardware, with the end goal of achieving higher performance and scalability. Over the past couple of years, open-source HPC software such as MPI, SHMEM, GASNet, Charm++, and other libraries has been developed over OFI in a co-design fashion. This talk will outline some of the designs that have emerged as part of that co-design effort and the corresponding performance improvements that have resulted from the reduced impedance mismatch.

Bio

Sayantan Sur is a Software Engineer at Intel Corp, in Hillsboro, Oregon. His work involves high-performance computing, specializing in scalable interconnection fabrics and message passing software (MPI). Before joining Intel Corp, Dr. Sur was a Research Scientist in the Department of Computer Science and Engineering at The Ohio State University. Earlier, he held a post-doctoral position at the IBM T. J. Watson Research Center, NY. He has published more than 20 papers in major conferences and journals related to these research areas. Dr. Sur received his Ph.D. degree from The Ohio State University in 2007.

12:00 - 12:10

Group Photo

12:10 - 1:00

Lunch

Abstract

The latest revolution in HPC is the move to a co-design architecture, a collaborative effort among industry, academia, and manufacturers to reach Exascale performance by taking a holistic system-level approach to fundamental performance improvements. Co-design architecture exploits system efficiency and optimizes performance by creating synergies between the hardware and the software.

Co-design recognizes that the CPU has reached the limits of its scalability, and offers an intelligent network as the new “co-processor” to share the responsibility for handling and accelerating application workloads. By placing data-related algorithms on an intelligent network, we can dramatically improve data center and application performance.

Bio

Gilad Shainer has been the vice president of marketing at Mellanox Technologies since March 2013. Previously, Mr. Shainer was Mellanox's vice president of marketing development from March 2012 to March 2013. Mr. Shainer joined Mellanox in 2001 as a design engineer and later served in senior marketing management roles between July 2005 and February 2012. Mr. Shainer holds several patents in the field of high-speed networking and contributed to the PCI-SIG PCI-X and PCIe specifications. Gilad Shainer holds an MSc degree (2001, Cum Laude) and a BSc degree (1998, Cum Laude) in Electrical Engineering from the Technion Institute of Technology in Israel.

Abstract

As high performance computing moves toward GPU-accelerated architectures, single-node application performance can be 3x to 75x faster than on CPUs alone. Performance increases of this size will require corresponding increases in network bandwidth and message rate to prevent the network from becoming the bottleneck to scalability. In this talk, we will present results from NVLink-enabled systems connected via quad-rail EDR InfiniBand.

Bio

Craig Tierney is a Solution Architect at NVIDIA supporting high performance computing and deep learning. He is focused on GPU architectures for high performance computing and deep learning systems, as well as on finding ways to apply deep learning in traditional HPC domains. Prior to joining NVIDIA, Craig spent more than a decade providing high performance computing architecture and computational science support to NOAA and several other government and educational organizations, including DOE, DOD, NASA, and Stanford University. Craig holds a Ph.D. in Aerospace Engineering Sciences from the University of Colorado at Boulder.

Abstract

Deep Learning (DL) is ubiquitous, yet leveraging distributed memory systems for DL algorithms is very hard. In this talk, we will present approaches to bridge this critical gap, starting with scaling DL algorithms on large-scale systems such as leadership class facilities (LCFs). Specifically, we will: 1) present our TensorFlow and Keras runtime extensions, which require negligible changes to user code for scaling DL implementations; 2) present communication-reducing/avoiding techniques for scaling DL implementations; 3) present approaches to fault-tolerant DL implementations; and 4) present research on semi-automatic pruning of DNN topologies. Our results will include validation at several US supercomputer sites, such as Berkeley's NERSC, the Oak Ridge Leadership Computing Facility, and PNNL Institutional Computing. We will provide pointers and discussion on the general availability of our research under the umbrella of the Machine Learning Toolkit for Extreme Scale (MaTEx), available at http://github.com/matex-org/matex.

Bio

Abhinav Vishnu is a chief scientist and team lead for scalable machine learning at Pacific Northwest National Laboratory. He focuses on designing extreme-scale Deep Learning algorithms that are capable of executing on supercomputers and cloud computing systems. The specific objectives are to design user-transparent distributed TensorFlow; novel communication reducing/approximation techniques for DL algorithms; fault-tolerant Deep Learning/Machine Learning algorithms; multi-dimensional deep neural networks; and applications of these techniques in several domains, such as high energy physics, computational chemistry, and general computer vision tasks. His research is publicly available as the Machine Learning Toolkit for Extreme Scale (MaTEx) at http://github.com/matex-org/matex.

Abstract

The aim of this talk is to present the current ongoing work on "Theoretical ROVibrational Energies" (TROVE), a code developed by UCL (UK) for the calculation of rovibrational energies of polyatomic molecules. TROVE is a flagship code for DiRAC, a UK integrated supercomputing facility for theoretical modelling and HPC-based research in particle physics, nuclear physics, astronomy and cosmology. The DiRAC facility provides a variety of computer architectures, matching machine architecture to the algorithm design and requirements of the research problems to be solved. TROVE, like many other scientific codes in the DiRAC community, has evolved around a shared-memory HPC architecture that will soon disappear and be replaced by more conventional distributed-memory HPC clusters. Our work is to enable TROVE to enter the distributed era. To ease portability and programmability, we decided to first approach the problem using PGAS. Focusing mainly on a specific part of the complex TROVE pipeline, we have compared three implementations of Coarray Fortran (Intel, OpenCoarrays, and OpenSHMEM + MVAPICH2). We report on both synthetic test cases and a real application. We compare the performance, portability, and usability of the three implementations on both Mellanox EDR and Intel Omni-Path interconnects, allowing us to draw conclusions on the effectiveness of this PGAS model for porting complex scientific codes to distributed-memory architectures.

Bio

Jeffrey Salmond has a background in Theoretical Physics and Scientific Computing. His postgraduate work in the Laboratory for Scientific Computing at the University of Cambridge primarily involved writing high-performance code for computational fluid dynamics problems. In his current role as a Research Software Engineer and High-Performance Computing Consultant, Jeffrey optimises the performance of complex scientific codes across a range of projects. These scientific codes are utilised for research within the departments of Physics, Chemistry, and Engineering.

3:00 - 3:45

Break and Student Poster Session

Co-designing MPI Runtimes and Deep Learning Frameworks for Scalable Distributed Training on GPU Clusters - Ammar Awan, The Ohio State University

Bringing the DMRG++ Application to Exascale - Arghya Chatterjee, Georgia Institute of Technology

High Performance and Scalable Broadcast Schemes for Deep Learning in GPU Clusters - Ching-Hsiang Chu, The Ohio State University

Characterization of Data Movement Requirements For Sparse Matrix Computations on GPUs - Emre Kurt, The Ohio State University

Accelerating Big Data Processing in the Cloud with Scalable Communication and I/O Schemes - Shashank Gugnani, The Ohio State University

Supporting Hybrid MPI + PGAS Programming Models through Unified Communication Runtime: An MVAPICH2-X Approach - Jahanzeb Hashmi, The Ohio State University

Integrate TAU, MVAPICH, and BEACON to Enable MPI Performance Monitoring - Aurele Maheo, University of Oregon

Multidimensional Encoding of Structural Brain Connections; Build Biological Networks with Preserved Edge Properties - Brent McPherson, Indiana University

Integrating MVAPICH and TAU through the MPI Tools Interface - A Plugin Architecture to Enable Autotuning and Recommendation Generation - Srinivasan Ramesh, University of Oregon

Energy Efficient Spinlock Synchronization - Karthik Vadambacheri, University of Cincinnati

Enabling Asynchronous Coupled Data Intensive Analysis Workflows on GPU-Accelerated Platforms via Data Staging - Daihou Wang, Rutgers University

Designing and Building Efficient HPC Cloud with Modern Networking Technologies on Heterogeneous HPC Clusters - Jie Zhang, The Ohio State University

Abstract

Irregular patterns, characterized by irregular memory accesses, control flow, and communication, are increasingly emerging in scientific and big data applications. When such applications are mapped onto HPC clusters, traditional implementations based on two-sided communication can severely affect application performance. One-sided communication, on the other hand, which allows the programmer to issue multiple operations asynchronously and complete them later, is a promising method to reduce communication latency, increase the overlap of communication and computation, and provide fine-grained parallelism. This talk will introduce our early experiences using MPI one-sided communication to accelerate several important bioinformatics applications. The evaluation shows that MPI one-sided communication can significantly improve application performance in most experimental setups. Insights from application and MPI library co-design for better performance will also be presented.

Bio

Dr. Hao Wang is a senior research associate in the Department of Computer Science at Virginia Tech. He received his Ph.D. from the Institute of Computing Technology at the Chinese Academy of Sciences and completed his postdoctoral training at the Ohio State University. His research interests include high performance computing, parallel computing architecture, and big data analytics. He has published 40+ papers with more than 900 citations. He has also served as a program committee member for 15 conferences/workshops and as a reviewer for over 10 computer science journals.

Abstract

Quantum simulations of large-scale semiconductor structures are important because they not only predict the properties of physically realizable novel materials but can also accelerate advanced device design. In this work, we explore the performance of MVAPICH 2.2 for electronic structure simulations of multi-million-atom systems on Intel Knights Landing (KNL) processors. After a brief introduction to the core numerical operations and the parallelization scheme, we demonstrate the scalability of simulations measured with MVAPICH 2.2 and compare the results with those measured with the MPICH-based Intel Parallel Studio 2017. The details of this analysis may serve as practical suggestions for strengthening the utility of MVAPICH on manycore-based high performance computing systems and in the computational nanoelectronics community.

Bio

H. Ryu received a BSEE from Seoul National University (South Korea), and an MSEE and a PhD from Stanford University and Purdue University (USA), respectively. He was with the System LSI division of Samsung Electronics Corp., and is now with the Korea Institute of Science and Technology Information (KISTI). He was one of the core developers of the 3D NanoElectronics MOdeling tool (NEMO-3D) at Purdue University, and now leads the Intel Parallel Computing Center (IPCC) at KISTI. His research interests cover the modelling of advanced nanoscale devices and high performance computing, with a focus on performance enhancement of large-scale PDE problems.

4:45 - 5:15

Open MIC Session

6:00 - 9:30

Banquet Dinner at Bravo Restaurant

1803 Olentangy River RD

Columbus, OH 43212

Wednesday, August 16

7:45 - 8:30

Registration and Continental Breakfast

Abstract

This talk will reflect on prior analysis of the challenges facing high-performance interconnect technologies intended to support extreme-scale scientific computing systems, how some of these challenges have been addressed, and what new challenges lie ahead. Many of these challenges can be attributed to the complexity created by hardware diversity, which has a direct impact on interconnect technology, but new challenges are also arising indirectly as reactions to other aspects of high-performance computing, such as alternative parallel programming models and more complex system usage models. We will describe some near-term research on proposed extensions to MPI to better support massive multithreading, and on implementation optimizations aimed at reducing the overhead of MPI tag matching. We will also describe a new portable programming model, based on the current Portals data movement layer, for offloading simple packet processing functions to a network interface. We believe this capability will offer significant performance improvements to applications and services relevant to high-performance computing as well as data analytics.

Bio

Ron Brightwell currently leads the Scalable System Software Department at Sandia National Laboratories. After joining Sandia in 1995, he was a key contributor to the high-performance interconnect software and lightweight operating system for the world’s first terascale system, the Intel ASCI Red machine. He was also part of the team responsible for the high-performance interconnect and lightweight operating system for the Cray Red Storm machine, which was the prototype for Cray’s successful XT product line. The impact of his interconnect research is visible in network technologies available today from Bull, Intel, and Mellanox. He has also contributed to the development of the MPI-2 and MPI-3 specifications. He has authored more than 100 peer-reviewed journal, conference, and workshop publications. He is an Associate Editor for the IEEE Transactions on Parallel and Distributed Systems, has served on the technical program and organizing committees for numerous high-performance and parallel computing conferences, and is a Senior Member of the IEEE and the ACM.

Abstract

There is a growing sense within the HPC community of the need for an open community effort to more efficiently build, test, and deliver integrated HPC software components and tools. To address this need, OpenHPC was launched as a Linux Foundation collaborative project in 2016 with combined participation from academia, national labs, and industry. The project's mission is to provide a reference collection of open-source HPC software components and best practices in order to lower barriers to deployment and advance the use of modern HPC methods and tools. This presentation will give an overview of the project, highlight packaging conventions and currently available software, outline integration testing/validation efforts with bare-metal installation recipes, and discuss recent updates and expected future plans.

Bio

Karl W. Schulz received his Ph.D. in Aerospace Engineering from the University of Texas in 1999. After completing a one-year post-doc, he transitioned to the commercial software industry, working for the CD-Adapco group as a Senior Project Engineer to develop and support engineering software in the field of computational fluid dynamics (CFD). After several years in industry, Karl returned to the University of Texas in 2003, joining the research staff at the Texas Advanced Computing Center (TACC), a leading research center for advanced computational science, engineering and technology. During his 10-year term at TACC, Karl was actively engaged in HPC research, scientific curriculum development and teaching, technology evaluation and integration, and strategic initiatives, serving on the Center's leadership team as an Associate Director and leading TACC's HPC group and Scientific Applications group during his tenure. He was a Co-principal investigator on multiple Top-25 system deployments, serving as application scientist and principal architect for the cluster management software and HPC environment. Karl also served as the Chief Software Architect for the PECOS Center within the Institute for Computational Engineering and Sciences, a research group focusing on the development of next-generation software to support multi-physics simulations and uncertainty quantification. Karl joined the Technical Computing Group at Intel in January 2014 and is presently a Principal Engineer engaged in the architecture, development, and validation of HPC system software.

Abstract

As the world of high performance computing evolves, new models of checkpointing are required. DMTCP (Distributed MultiThreaded CheckPointing) continues to evolve to support such models. The newest contribution to HPC is a SLURM module, currently in beta testing, that integrates DMTCP to support automatic checkpointing in SLURM. Naturally, MVAPICH is among the major MPI implementations supported. The DMTCP team has also demonstrated support for OpenSHMEM. DMTCP has also been extended to support Linux ptys, which are often used by interactive subsystems. Internally, the DMTCP team is now experimenting with the emerging VeloC API for memory cutouts, which are intended to provide features similar to SC/R and FTI. Finally, there are internal research efforts, ranging from the cautiously optimistic to the highly speculative, that will support transparent checkpointing for the Intel Omni-Path network fabric, for statically linked target executables, and for executables using NVIDIA GPUs.

Bio

Professor Cooperman works in high-performance computing and scalable applications for computational algebra. He received his B.S. from the University of Michigan in 1974 and his Ph.D. from Brown University in 1978. He then spent six years in basic research at GTE Laboratories. He came to Northeastern University in 1986 and has been a full professor since 1992. In 2014, he was awarded a five-year IDEX Chair of Attractivity from the Université Fédérale Toulouse Midi-Pyrénées, France. Since 2004, he has led the DMTCP project (Distributed MultiThreaded CheckPointing). Prof. Cooperman also has a 15-year relationship with CERN, where his work on semi-automatic thread parallelization of task-oriented software is included in the million-line Geant4 high-energy physics simulator. His current research emphasizes studying the limits of transparent checkpoint-restart. Current domains of interest include supercomputing, cloud computing, engineering desktops (license servers, etc.), GPU-accelerated graphics, GPGPU computing, and the Internet of Things.

10:30 - 11:00

Break

Abstract

The talk will focus on the importance and significance of multidisciplinary/interdisciplinary research and education.

Bio

Sushil K. Prasad is a Program Director at the National Science Foundation in its Office of Advanced Cyberinfrastructure (OAC) in the Computer and Information Science and Engineering (CISE) directorate. He is an ACM Distinguished Scientist and a Professor of Computer Science at Georgia State University, where he directs the Distributed and Mobile Systems Lab, which carries out research in parallel, distributed, and data-intensive computing and systems. He has twice been elected chair of the IEEE-CS Technical Committee on Parallel Processing (TCPP), and he leads the NSF-supported TCPP Curriculum Initiative on Parallel and Distributed Computing for undergraduate education.

Abstract

Applications, programming languages, and libraries that leverage sophisticated network hardware capabilities have a natural advantage in today's and tomorrow's high-performance and data center computing environments. Modern RDMA-based network interconnects provide incredibly rich functionality (RDMA, atomics, OS bypass, etc.) that enables low-latency and high-bandwidth communication services. This functionality is supported by a variety of interconnect technologies such as InfiniBand, RoCE, iWARP, Intel OPA, Cray's Aries/Gemini, and others. Over the last decade, the HPC community has developed a variety of user- and kernel-level protocols and libraries, including MPI, SHMEM, and UPC, that enable high-performance applications over RDMA interconnects. With the emerging availability of HPC solutions based on the ARM CPU architecture, it is important to understand how ARM integrates with RDMA hardware and the HPC network software stack. In this talk, we will give an overview of the ARM architecture and system software stack. We will discuss how the ARM CPU interacts with network devices and accelerators. In addition, we will share our experience in enabling the RDMA software stack and one-sided communication libraries (Open UCX, OpenSHMEM/SHMEM) on ARM and present preliminary evaluation results.

Bio

Pavel is a Principal Research Engineer at ARM with over 16 years of experience in developing HPC solutions. His work focuses on co-designing software and hardware building blocks for high-performance interconnect technologies, developing communication middleware, and novel programming models. Prior to joining ARM, he spent five years at Oak Ridge National Laboratory (ORNL) as a research scientist in the Computer Science and Math Division (CSMD). In this role, Pavel was responsible for research and development in multiple projects in the high-performance communication domain, including Collective Communication Offload (CORE-Direct & Cheetah), OpenSHMEM, and OpenUCX. Before joining ORNL, Pavel spent ten years at Mellanox Technologies, where he led the Mellanox HPC team and was responsible for developing the HPC software stack, including the OFA software stack, OpenMPI, MVAPICH, OpenSHMEM, and others. Pavel is a recipient of the prestigious R&D 100 award for his contribution to the development of the CORE-Direct collective offload technology. In addition, Pavel has contributed to multiple open specifications (OpenSHMEM, MPI, UCX) and numerous open source projects (MVAPICH, OpenMPI, OpenSHMEM-UH, etc.).

Abstract

SDSC's Comet cluster features 1944 nodes with Intel Haswell processors and two types of GPU nodes: (1) 36 nodes with Intel Haswell CPUs (2-socket, 24 cores), each with 4 NVIDIA K80 GPUs (two accelerator cards), and (2) 36 nodes with Intel Broadwell CPUs (2-socket, 28 cores), each with 4 NVIDIA P100 GPUs. The cluster has MVAPICH2, MVAPICH2-X, and MVAPICH2-GDR installations available for users. We will present microbenchmark and application performance results using MVAPICH2-GDR on the GPU nodes. Applications will include HOOMD-Blue and OSU-Caffe.

Bio

Mahidhar Tatineni received his M.S. and Ph.D. in Aerospace Engineering from UCLA. He currently leads the User Services group at SDSC. He has led the deployment and support of high-performance computing and data applications software on several NSF and UC resources, including Comet and Gordon at SDSC. He has worked on many NSF-funded optimization and parallelization research projects, such as petascale computing for magnetosphere simulations, MPI performance tuning frameworks, hybrid programming models, topology-aware communication and scheduling, big data middleware, and application performance evaluation using next-generation communication mechanisms for emerging HPC systems.

Abstract

During 2017 the University of Cambridge Research Computing Service, in partnership with EPSRC and STFC, announced a co-investment for the creation of the "Cambridge Service for Data Driven Discovery" (CSD3). CSD3 is a multi-institution service underpinned by an innovative, petascale, data-centric HPC platform, designed specifically to drive data-intensive simulation and high-performance data analysis. As part of this new facility, a brand-new GPU cluster called "Wilkes-2", based on Dell C4130 servers, NVIDIA Tesla P100 GPUs, and Mellanox InfiniBand EDR, has been deployed. Wilkes-2, ranked #100 in the June 2017 Top500 and #5 in the June 2017 Green500, is the first GPU-accelerated petascale system in the UK, with 1.19 PFlop/s measured performance. It replaced "Wilkes-1", which was #2 in the November 2013 Green500 and was a pioneering system for experimenting at scale with GPUDirect RDMA. In this talk, we will present early performance numbers for a few applications, from CFD to materials science, running at scale with MVAPICH2. Exploiting GPUDirect RDMA from every GPU in the system is made possible by MVAPICH2-GDR, which will be fully supported and deployed to all users for a range of compilers.

Bio

Filippo Spiga is Head of Research Software Engineering at the University of Cambridge's Research Computing Services. He leads a team working on research software in various fields, from finite element methods to quantum chemistry, pursuing the objective "Better Software for Better Research". He is interested in GPU-accelerated computing and fascinated by the increasing heterogeneity of future compute platforms that will disrupt and reshape the future of scientific computing. He is involved in the new UK Tier-2 EPSRC "Cambridge Service for Data Driven Discovery" (CSD3) and is an executive member of the Cambridge Big Data initiative.

12:30 - 1:00

Lunch

Abstract

As the number of cores integrated in a modern processor package increases, the scalability of MPI intra-node communication becomes more important. LiMIC was proposed in 2005, opening a new era of kernel-level support for MPI intra-node communication on multi-core systems. Its modified version, LiMIC2, has been released with MVAPICH/MVAPICH2 and has inspired several similar approaches, such as CMA (Cross Memory Attach). In this talk, I will present an idea for improving the performance of LiMIC2 on contemporary many-core processors and share preliminary results on the Intel Xeon Phi Knights Landing processor.

Bio

Hyun-Wook Jin is a Professor in the Department of Computer Science and Engineering at Konkuk University, Seoul, Korea, where he leads the System Software Research Laboratory (SSLab). Before joining Konkuk University in 2006, he was a Research Associate in the Department of Computer Science and Engineering at The Ohio State University. He received his Ph.D. degree from Korea University in 2003. His main research focus is the design of operating systems for high-end computing systems and cyber-physical systems. His research group contributes to several open source projects, such as MVAPICH, RTEMS, and Linux.

Abstract

The FFMK project designs, builds, and evaluates a software system architecture to address the challenges expected in an Exascale system. In particular, these include performance losses caused by the much larger impact of runtime variability within applications, the hardware, and the operating system. Our node operating system is based on a small L4 microkernel, which supports a combination of specialized runtimes (e.g., MPI) and a full-blown general purpose operating system (Linux) for compatibility. We carefully split operating system and runtime components such that performance-critical functionality running on L4 remains undisturbed by management-related background activities in Linux and HPC services.

The talk will cover our experiences and lessons learned in porting MPI libraries, and MVAPICH2 in particular, to such an operating system. We will discuss different approaches to adapting MPI and the communication drivers, including a specialized communication back-end that targets the L4 microkernel directly, and two iterations of a hybrid system architecture in which MPI and the InfiniBand driver stack are split to run on both L4 and a virtualized Linux kernel. We will also discuss maintenance effort, compatibility, and the latency benefits of running MPI programs on a fast, noise-free microkernel.

Bio

Dr. Carsten Weinhold received a Master's degree (Diplom) from TU Dresden, Germany, in 2006. He conducted research in the area of microkernel-based operating systems and security architectures in several projects funded by the European Union and the German Research Foundation. In 2014 he defended his doctoral thesis on reducing the size and complexity of the security-critical codebase of file systems. The underlying principle of splitting operating system services into critical components (e.g., for security) and uncritical ones (for everything else) is now being applied in high-performance computing (HPC). The goal of this effort, conducted in the FFMK project, is to use these principles to improve performance and latency in the critical paths of the runtime and system software for large-scale HPC systems.

Abstract

HPC clusters with multi-/many-core processors, accelerators, and high-performance interconnects (such as InfiniBand, Omni-Path, iWARP, and RoCE) have grown significantly over the last few years. To alleviate the cost burden, sharing HPC cluster resources with end users through virtualization is becoming increasingly attractive. The recently introduced Single-Root I/O Virtualization (SR-IOV) technique for InfiniBand and High-Speed Ethernet on HPC clusters provides native I/O virtualization capabilities and opens up many opportunities to design efficient HPC clouds. However, SR-IOV also brings additional design challenges arising from the lack of support for locality-aware communication and virtual machine migration. This tutorial will first present an efficient approach to building HPC clouds based on MVAPICH2 over SR-IOV enabled HPC clusters. High-performance designs of a virtual machine (KVM) and container (Docker, Singularity) aware MVAPICH2 library (called MVAPICH2-Virt) will be introduced. The tutorial will also present a high-performance virtual machine migration framework for MPI applications on SR-IOV enabled InfiniBand clouds. The second part of the tutorial will present advanced designs with cloud resource managers such as OpenStack and SLURM that make it easier for users to deploy and run their applications with the MVAPICH2 library on HPC clouds. A demo will show how to use the MVAPICH2-Virt library.

Bio

Dr. Xiaoyi Lu is a Research Scientist in the Department of Computer Science and Engineering at the Ohio State University, USA. His current research interests include high-performance interconnects and protocols, Big Data, the Hadoop/Spark/Memcached ecosystem, parallel computing models (MPI/PGAS), virtualization, cloud computing, and deep learning. He has published over 80 papers in international journals and conferences related to these research areas. He has been actively involved in various professional activities (PC Co-Chair, PC Member, Reviewer, Session Chair) for academic journals and conferences. Dr. Lu currently leads the research and development of RDMA-based accelerations for Apache Hadoop, Spark, HBase, and Memcached, and of the OSU HiBD micro-benchmarks, which are publicly available at http://hibd.cse.ohio-state.edu. These libraries are currently used by more than 240 organizations in 30 countries, and more than 22,550 downloads have taken place from the project site. He is a core member of the MVAPICH2 (High Performance MPI over InfiniBand, iWARP and RoCE) project and leads the research and development of MVAPICH2-Virt (high-performance and scalable MPI for hypervisor- and container-based HPC clouds). He is a member of IEEE and ACM. More details about Dr. Lu are available at http://web.cse.ohio-state.edu/~lu.932/.

3:00 - 3:30

Break

Abstract

The tutorial will start with an overview of the MVAPICH2 libraries and their features. Next, we will focus in depth on installation guidelines, runtime optimizations, and tuning flexibility. We will then give an overview of configuration and debugging support in the MVAPICH2 libraries, followed by support for GPU- and MIC-enabled systems. The performance impact of the various features and optimization techniques will be discussed in an integrated fashion. Best practices for a set of common applications will be presented, along with case studies on redesigning applications to take advantage of hybrid MPI+PGAS programming models. Finally, the use of the MVAPICH2-EA libraries will be explained.

Bio

Dr. Hari Subramoni is a research scientist in the Department of Computer Science and Engineering at the Ohio State University. His current research interests include high-performance interconnects and protocols, parallel computer architecture, network-based computing, exascale computing, network topology aware computing, QoS, power-aware LAN-WAN communication, fault tolerance, virtualization, big data, and cloud computing. He has published over 50 papers in international journals and conferences related to these research areas. He has been actively involved in various professional activities for academic journals and conferences. He is a member of IEEE. More details about Dr. Subramoni are available at http://www.cse.ohio-state.edu/~subramon.