MUG'16

Conference Location: Ohio Supercomputer Center Bale Theater

MUG'16 meeting attendees gather for a group photo.

Monday, August 15

7:45 - 9:00

Registration and Continental Breakfast

Abstract

High performance computing has begun scaling beyond Petaflop performance towards the Exaflop mark. One of the major concerns throughout the development toward such performance capability is scalability - at the component level, system level, middleware and the application level. A Co-Design approach between the development of the software libraries and the underlying hardware can help to overcome those scalability issues and to enable a more efficient design approach towards the Exascale goal. In this tutorial session we will review the latest development areas within the Co-Design architecture:

- SHArP Technology

- Hardware tag matching (UCX)

- Out-of-order RDMA / adaptive routing (UCX)

- Improved atomic performance with MEMIC (OpenSHMEM)

- Dynamically Connected Transport (OpenSHMEM)

Bio

Devendar Bureddy is a Staff Engineer at Mellanox Technologies. At Mellanox, Devendar was instrumental in building several key technologies such as SHArP and HCOLL. Previously, he was a software developer at The Ohio State University in the Network-Based Computing Laboratory led by Dr. D. K. Panda. At Nowlab, Devendar was involved in the design and development of MVAPICH2, an open-source high-performance implementation of MPI over InfiniBand and 10GigE/iWARP. He received his Master's degree in Computer Science and Engineering from the Indian Institute of Technology, Kanpur. His research interests include high-speed interconnects, parallel programming models and HPC software.

10:30 - 11:00

Break

Abstract

Knights Landing is the next generation Intel Xeon Phi processor that has many trailblazing features, such as self-boot, improved performance, integrated memory on package and integrated OmniPath communication fabric. The first generation Intel OmniPath fabric delivers 100 Gb/s of bandwidth per port and very low latency. In this tutorial, we delve into technical details of the design elements of both the Intel Xeon Phi processor and Intel OmniPath fabric with a focus on how software can take advantage of these features.

Bio

Ravindra Babu Ganapathi (Ravi) is the Technical Lead/Engineering Manager responsible for technical leadership, vision and execution of Intel OmniPath Libraries. In the past, Ravi was the lead developer and architect for Intel Xeon Phi offload compiler runtime libraries (MYO, COI) and also made key contributions across the Intel Xeon Phi software stack, including first-generation Linux driver development. Before Intel, Ravi was a lead developer implementing high-performance libraries for image and signal processing tuned for the x86 architecture. Ravi received his MS in Computer Science from Columbia University, NY.

Sayantan Sur is a Software Engineer at Intel Corp, in Hillsboro, Oregon. His work involves high-performance computing, specializing in scalable interconnection fabrics and message-passing software (MPI). Before joining Intel Corp, Dr. Sur was a Research Scientist at the Department of Computer Science and Engineering at The Ohio State University. Earlier, he held a post-doctoral position at IBM T. J. Watson Research Center, NY. He has published more than 20 papers in major conferences and journals related to these research areas. Dr. Sur received his Ph.D. degree from The Ohio State University in 2007.

12:00 - 1:00

Lunch

Abstract

NVIDIA GPUs have become ubiquitous on HPC clusters and the de facto platform for the emerging field of machine learning. Efficiently feeding data into and streaming results out of the GPUs is critical in both fields. GPUDirect is a family of technologies that tackle this problem by allowing peer GPUs to directly interact both among themselves and with 3rd party devices, like network adapters. GPUDirect P2P and RDMA have both gotten a boost recently with the Pascal architecture, high-speed data paths between GPUs over NVLink and improved RDMA performance. Complementarily, GPUDirect Async now allows for direct mutual synchronization among GPUs and network adapters. This tutorial gives an overview of GPUDirect technologies and goes into detail about GPUDirect Async.

Bio

Davide Rossetti is lead engineer for GPUDirect at NVIDIA. Before that, he spent more than 15 years at INFN (Italian National Institute for Nuclear Physics) as a researcher; while there, he was a member of the APE experiment, participating in the design and development of two generations of APE supercomputers, and was the main architect of two FPGA-based cluster interconnects, APEnet and APEnet+. His research and development activities are in the design and development of parallel computing architectures and high-speed networking interconnects optimized for numerical simulations, in particular Lattice Quantum Chromodynamics (LQCD) simulations, while his interests span areas such as HPC, computer graphics, operating systems, I/O technologies, GPGPUs, embedded systems, digital design and real-time systems. He holds a Magna Cum Laude Laurea in Theoretical Physics (roughly equivalent to a Master's degree) and has published more than 50 papers.

Sreeram Potluri is a Senior Software Engineer at NVIDIA. He works on designing technologies that enable high performance and scalable communication on clusters with NVIDIA GPUs. His research interests include high-performance interconnects, heterogeneous architectures, parallel programming models and high-end computing applications. He received his PhD in Computer Science and Engineering from The Ohio State University. He has published over 30 papers in major peer-reviewed journals and international conferences.

3:00 - 3:30

Break

6:00 - 9:30

Reception Dinner at Miller's Columbus Ale House

1201 Olentangy River Rd

Columbus, OH 43212

Tuesday, August 16

7:45 - 8:15

Registration and Continental Breakfast

8:15 - 8:30

Opening Remarks

Dr. David Hudak, Interim Executive Director, Ohio Supercomputer Center

Dr. Sushil Prasad, Program Director, National Science Foundation

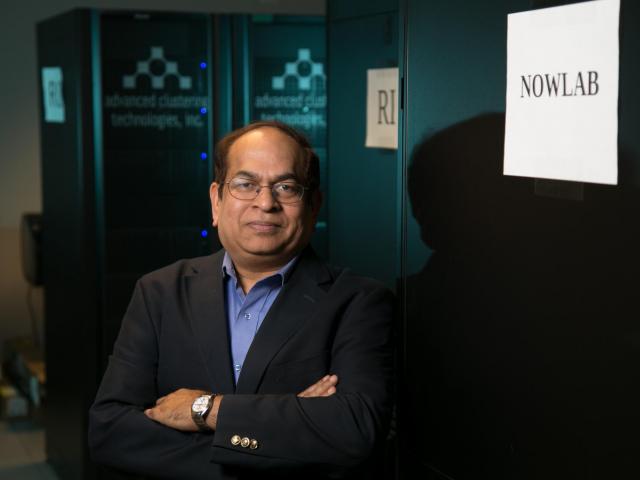

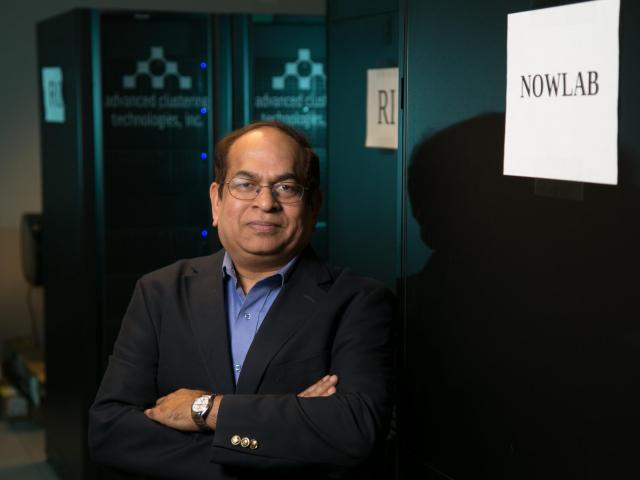

Dr. Dhabaleswar K (DK) Panda, Professor, The Ohio State University

Abstract

Implementation of exascale computing will be different in that application performance is supposed to play a central role in determining the system performance, rather than just considering floating point performance of the high-performance Linpack benchmark. This immediately raises the question as to what the yardstick will be, by which we measure progress towards exascale computing. Furthermore, if we simply settle on improving application performance by a certain factor (e.g. 100 compared to today), what is the baseline from which we start? Based on recent work at CSCS, where we have deployed a GPU cluster to run operational weather forecasting, I will demonstrate that this baseline can differ by up to a factor of 10, depending on implementation choices of applications on today's petascale computing architectures. I will discuss what type of performance improvements will be needed to reach kilometer-scale global climate and weather simulations. This challenge will probably require more than exascale performance.

Bio

Thomas Schulthess is the director of the Swiss National Supercomputing Centre (CSCS) at Manno. He studied physics and earned his Ph.D. degree at ETH Zurich. As CSCS director, he is also professor of computational physics at ETH. He worked for twelve years at the Oak Ridge National Laboratory (ORNL) in Tennessee, a leading supercomputing and research center in the US. From 2002, he led the “Computational Materials Science Group” there, with 30 co-workers.

Thomas Schulthess studied physics at ETH Zurich and earned his doctorate in 1994 with a thesis on metal alloys based on experimental data and supercomputing simulations. He subsequently continued his research activity in the US and published around seventy research papers in the best journals of his field. His present research interests are focused on the magnetic properties of metallic nano-particles (nano-magnetism). Using high-performance computing, he is studying the magnetic structures of metal alloys. Of particular interest are his studies on giant magnetoresistance. He is also a two-time winner of the Gordon Bell Award.

Abstract

This talk will provide an overview of the MVAPICH project (past, present and future). The future roadmap and features for upcoming releases of the MVAPICH2 software family (including MVAPICH2-X, MVAPICH2-GDR, MVAPICH2-Virt, MVAPICH2-EA and MVAPICH2-MIC) will be presented. Current status and future plans for OSU INAM, OEMT and OMB will also be presented.

Bio

DK Panda is a Professor and University Distinguished Scholar of Computer Science and Engineering at the Ohio State University. He has published over 400 papers in the area of high-end computing and networking. The MVAPICH2 (High Performance MPI and PGAS over InfiniBand, iWARP and RoCE) libraries, designed and developed by his research group (http://mvapich.cse.ohio-state.edu), are currently being used by more than 2,625 organizations worldwide (in 81 countries). More than 383,000 downloads of this software have taken place from the project's site. This software is empowering several InfiniBand clusters (including the 12th, 15th and 31st ranked ones) in the TOP500 list. The RDMA packages for Apache Spark, Apache Hadoop and Memcached together with OSU HiBD benchmarks from his group (http://hibd.cse.ohio-state.edu) are also publicly available. These libraries are currently being used by more than 185 organizations in 20 countries. More than 17,500 downloads of these libraries have taken place. He is an IEEE Fellow. More details about Prof. Panda are available at http://www.cse.ohio-state.edu/~panda.

Abstract

For the past three years, MPI startup performance has provided a point of discussion at every MUG. This talk guarantees that it will be again, but perhaps for the last time (though probably not). I will discuss recent work in MVAPICH to provide fast, scalable startup. It turns out that bootstrapping MPI is more interesting than you might realize. With MVAPICH having solved this, now what will we all talk about? I'll propose some ideas for that, too.

Bio

Adam is a member of the Development Environment Group within Livermore Computing. His background is in MPI development, collective algorithms, networking, and parallel I/O. He served as an author of the MPI 3.0 standard, and he is responsible for supporting MPI on Livermore's Linux clusters. He works with a team to develop the Scalable Checkpoint / Restart (SCR) library, which uses MPI and distributed storage to reduce checkpoint / restart costs. He also guides development of the mpiFileUtils project, which provides a suite of MPI-based tools to manage large data sets on parallel file systems. He serves as the Livermore lead for the CORAL burst buffer working group. His recent work includes applying MPI and fast storage to deep learning, as well as investigation of non-POSIX-compliant parallel file systems.

10:30 - 11:00

Break

Abstract

Hardware virtualization has been gaining a significant share of computing time in recent years. Using virtual machines (VMs) for parallel computing is an attractive option for many users. A VM gives users the freedom to choose an operating system, software stack and security policies, leaving the physical hardware, OS management, and billing to physical cluster administrators. The well-known solutions for cloud computing, both commercial (Amazon Cloud, Google Cloud, Yahoo Cloud, etc.) and open-source (OpenStack, Eucalyptus), provide platforms for running a single VM or a group of VMs. Alongside these benefits, there are also some drawbacks, which include reduced performance when running code inside a VM, increased complexity of cluster management, and the need to learn new tools and protocols to manage the clusters.

At SDSC, we have created a novel framework and infrastructure by providing virtual HPC clusters to projects using the NSF sponsored Comet supercomputer. Managing virtual clusters on Comet is similar to managing a bare-metal cluster in terms of processes and tools that are employed. This is beneficial because such processes and tools are familiar to cluster administrators. Unlike platforms like AWS, Comet’s virtualization capability supports installing VMs from ISOs (i.e., a CD-ROM or DVD image) or via an isolated management VLAN (PXE). At the same time, we’re helping projects take advantage of VMs by providing an enhanced client tool for interaction with our management system called Cloudmesh client. Cloudmesh client can also be used to manage virtual machines on OpenStack, AWS, and Azure.

Bio

Rick Wagner is the High Performance Computing Systems Manager at the San Diego Supercomputer Center, and a Ph.D. Candidate in Physics at the University of California, San Diego focusing his research on analyzing simulations of supersonic turbulence. In his managerial role, Rick has technical and operational responsibility for two of the NSF-funded Extreme Science and Engineering Discovery Environment (XSEDE) HPC clusters, Trestles and Gordon, and SDSC's Data Oasis parallel file systems. He has also worked with Argonne National Laboratory on coupling remote large-scale visualization resources to tiled display walls over dynamic circuits networks on the Department of Energy's Energy Sciences Network. Rick's other interests include promoting the sharing of astrophysical simulations through standardized metadata descriptions and access protocols, and he is currently serving as the Vice-Chair of the Theory Interest Group of the International Virtual Astronomical Observatory. His latest side project involves working with undergraduates to develop course materials on parallel programming for middle and high school students using Raspberry Pis.

Abstract

MPI applications may suffer from process and node failures, two of the most common classes of failures in HPC systems. On the road to exascale computing, failure rates are expected to increase, so programming models that allow applications to recover efficiently from these failures with few application changes are desirable. In this talk, I will describe Reinit, a fault-tolerance programming model that allows MPI applications to achieve faster failure recovery and that is easy to integrate into large applications. I will describe a prototype of the model that is being developed in MVAPICH2 and the Slurm resource manager, in addition to initial performance results.

Bio

Ignacio Laguna earned the M.Sc. and Ph.D. degrees in Computer Engineering from Purdue University, West Lafayette, Indiana, in 2008 and 2012 respectively. He is currently a Computer Scientist at the Center for Applied Scientific Computing (CASC) at the Lawrence Livermore National Laboratory. His research work focuses on the development of tools and techniques to improve the reliability of HPC applications, including debugging tools, fault-tolerance and resilience techniques, and compiler-based application analysis. In 2011 he received the ACM and IEEE George Michael Memorial Fellowship for his Ph.D. work on large-scale failure diagnosis techniques.

12:00 - 1:00

Lunch

Abstract

Despite GPU-accelerated hybrid machines having been standard in HPC for years, a tension can still be felt. The CPU is snappy but slow, the GPU is powerful but relatively higher-latency, the NIC is low-latency but hard to tame. In this talk, I will briefly substantiate those statements and discuss our attempts at improving the situation.

Bio

Davide Rossetti is lead engineer for GPUDirect at NVIDIA. Before that, he spent more than 15 years at INFN (Italian National Institute for Nuclear Physics) as a researcher; while there, he was a member of the APE experiment, participating in the design and development of two generations of APE supercomputers, and was the main architect of two FPGA-based cluster interconnects, APEnet and APEnet+. His research and development activities are in the design and development of parallel computing architectures and high-speed networking interconnects optimized for numerical simulations, in particular Lattice Quantum Chromodynamics (LQCD) simulations, while his interests span areas such as HPC, computer graphics, operating systems, I/O technologies, GPGPUs, embedded systems, digital design and real-time systems. He holds a Magna Cum Laude Laurea in Theoretical Physics (roughly equivalent to a Master's degree) and has published more than 50 papers.

Abstract

I will discuss the challenges and opportunities in parallelizing Molecular Dynamics simulations on multiple GPUs. The goal is to routinely perform simulations with hundreds of thousands and up to several million particles on multiple GPUs. We have previously shown how to obtain strong scaling on thousands of GPUs and how to optimize for GPU-RDMA support. In this talk, I will focus on implementing advanced Molecular Dynamics features with MPI on GPUs, namely a domain decomposition for the Fast Fourier Transform that is used in the calculation of the Coulomb force, a non-iterative version of pairwise distance constraints, and rigid body constraints. These are available in the recently released HOOMD-blue 2.0, a general-purpose particle simulation toolkit and Python package, implemented in C/C++ and CUDA. The new features facilitate, in particular, simulations of biomolecules with coarse-grained force fields.

Bio

After graduating from Leipzig University, Germany in 2011 with a PhD in Theoretical Physics, Jens Glaser spent two years as a Postdoc at the University of Minnesota, demonstrating the universality of block copolymer melts using computer simulations. He is currently a Postdoc at the University of Michigan in the Department of Chemical Engineering, and is working on problems of protein crystallization, depletion interactions and high-performance particle-based simulation codes.

Abstract

Caffe-MPI is a parallel deep learning framework that achieves high scalability on GPU clusters equipped with the Lustre parallel file system, an InfiniBand network and an MPI environment. A multi-level parallel method including master-slave, peer-to-peer and loop-peer schemes is used to achieve high scalability. The optimization methods presented include the use of the Lustre parallel file storage system for parallel data reading, heterogeneous computing and communication, and GPU RDMA with MVAPICH2. The performance of Caffe-MPI is 13 times greater than the performance of a single GPU card, and the scalability is above 80%.

Bio

Dr. Shaohua Wu has extensive experience in applying high performance computing technologies in fields such as computational fluid dynamics, particle physics, astronomy and numerical linear algebra. Shaohua graduated from Tsinghua University in January 2013. After receiving his PhD degree, he joined Inspur in February 2013. His current work focuses on parallelizing and optimizing applications on different platforms such as CPU, CPU+MIC and CPU+GPU.

Abstract

Wilkes started its operational life in November 2013, and since the very beginning it has been a platform for developing and testing GPU Direct over RDMA (GDR) at scale. During the past years, HPCS has engaged with researchers in many countries to help and support, both directly and indirectly, software development around GDR. This talk briefly touches on the challenges in delivering a GPU service with cutting-edge GDR capabilities. From an application development perspective, it is simple to find cases where GDR is particularly good; there are also others where it is "less good". In collaboration with other partners, the performance behavior of a 7-point 3D Laplacian stencil benchmark has been studied, looking at the MPI+OpenMP+CUDA programming model to exploit both CPU and GPU together as peers without diminishing one in favor of the other. The talk will briefly cover our findings and lessons learnt.

Bio

Filippo leads a team of Research Software Engineers at the University of Cambridge working on several software projects across various Schools and Departments, from Bioinformatics to Engineering to Social Sciences, pursuing the mission of "Better Software for Better Research". As a member of the Quantum ESPRESSO Foundation, he is responsible for several aspects of the Quantum ESPRESSO project, from code development to release cycles and dissemination. His main interests cover general High Performance Computing topics (especially MPI+X programming), application optimization and low-power micro-architectures. He is a GPU advocate and promotes software modernization in HPC.

3:00 - 3:45

Break and Student Poster Session

A Smart Parallel and Hybrid Computing Platform Enabled By Machine Learning - Roohollah Amiri, Boise State

OSU-Caffe: Distributed Deep Learning for Multi-GPU Clusters - Ammar Ahmad Awan, The Ohio State University

Job Startup at Exascale: Challenges and Solutions - Sourav Chakraborty, The Ohio State University

Exploring Scientific Applications’ Characteristics to Mitigate the Impact of SDCs on HPC platforms - Chao Chen, Georgia Tech

Designing High Performance Heterogeneous Broadcast for Streaming Applications on GPU Clusters - Ching-Hsiang Chu, The Ohio State University

Test-Driving MPI_T in Performance Tool Development: MPI Advisor - Esthela Gallardo, University of Texas at El Paso

Supporting Hybrid MPI+PGAS Programming Models through Unified Communication Runtime: An MVAPICH2-X Approach - Jahanzeb Hashmi, The Ohio State University

Impact of Frequency Scaling on One Sided Remote Memory Accesses - Siddhartha Jana, University of Houston

Designing High Performance MPI RMA and PGAS Models with Modern Networking Technologies on Heterogeneous HPC Clusters - Mingzhe Li, The Ohio State University

Diagnosis of ADHD using fMRI and 3D Convolutional Neural Networks over GPU Clusters - Richard Platania, Louisiana State University

A Software Infrastructure for MPI Performance Engineering: Integrating MVAPICH and TAU via the MPI Tools Interface - Srinivasan Ramesh, University of Oregon

Automatic Generation of MPI Tool Wrappers for Fortran and C Interoperability - Soren Rasmussen, University of Oregon

Abstract

Collective communications are the centerpiece to HPC application performance at scale. The MVAPICH group has a rich history of designing optimized collective communication algorithms, and making them part of MVAPICH/MVAPICH2 releases. Over the course of time, there has been an ongoing discussion about designing collective algorithms over generalized primitives that are offloaded. The main benefit of a generalized communication primitives interface is that the MPI library developers (like the MVAPICH team) can continue to innovate in the algorithm space, whereas the fabric vendors can focus on offloading patterns of communication in parts of the fabric that make the most sense.

In this talk, I will present a proposal to extend the Open Fabrics API to introduce communication operations that can be scheduled for future execution. These schedule-able operations can then be arranged by the MPI library in a pattern so as to form an entire collective operation. Using this proposed interface along with an equally capable fabric, the MPI library can obtain full overlap of computation and communication for collective operations.

This is joint work with Jithin Jose and Charles Archer from Intel Corp.

Bio

Sayantan Sur is a Software Engineer at Intel Corp, in Hillsboro, Oregon. His work involves high-performance computing, specializing in scalable interconnection fabrics and message-passing software (MPI). Before joining Intel Corp, Dr. Sur was a Research Scientist at the Department of Computer Science and Engineering at The Ohio State University. Earlier, he held a post-doctoral position at IBM T. J. Watson Research Center, NY. He has published more than 20 papers in major conferences and journals related to these research areas. Dr. Sur received his Ph.D. degree from The Ohio State University in 2007.

Abstract

Since the specification of the RMA interface was refined in MPI 3.0, implementations of this interface have been improving to the point where they can serve as an efficient method for dynamic communication patterns. As one such application, this talk introduces our framework for runtime algorithm selection of collective communication using this interface. In this framework, to determine whether the algorithm needs to be re-selected at runtime, each process invokes a remote atomic operation of RMA to notify the master process when it detects a significant change in the performance of the current algorithm. Experimental results showed that this framework could dynamically change the algorithm of collective communication with low overhead.

Bio

Takeshi Nanri received his Ph.D. degree in Computer Science in 2000 from Kyushu University, Japan. Since 2001, he has been an associate professor at the Research Institute for Information Technology of Kyushu University. His major interest is middleware for large-scale parallel computing. He is currently leading a 5.5-year project, ACE (Advanced Communication libraries for Exa) of JST CREST, Japan.

4:45 - 5:15

Open MIC Session

6:00 - 9:30

Banquet Dinner at Bravo Restaurant

1803 Olentangy River Rd

Columbus, OH 43212

Wednesday, August 17

7:45 - 8:30

Registration and Continental Breakfast

Abstract

The latest revolution in high-performance computing is the move to a co-design architecture, a collaborative effort among industry thought leaders, academia, and manufacturers to reach Exascale performance by taking a holistic system-level approach to fundamental performance improvements. Co-design architecture exploits system efficiency and optimizes performance by creating synergies between the hardware and the software, and between the different hardware elements within the data center. Co-design recognizes that the CPU has reached the limits of its scalability, and offers an intelligent network as the new "co-processor" to share the responsibility for handling and accelerating application workloads. By placing data-related algorithms on an intelligent network, we can dramatically improve data center and application performance.

Bio

Gilad Shainer has been the vice president of marketing at Mellanox Technologies since March 2013. Previously, Mr. Shainer was Mellanox's vice president of marketing development from March 2012 to March 2013. Mr. Shainer joined Mellanox in 2001 as a design engineer and later served in senior marketing management roles between July 2005 and February 2012. Mr. Shainer holds several patents in the field of high-speed networking and contributed to the PCI-SIG PCI-X and PCIe specifications. Gilad Shainer holds an MSc degree (2001, Cum Laude) and a BSc degree (1998, Cum Laude) in Electrical Engineering from the Technion Institute of Technology in Israel.

Abstract

Today, many supercomputing sites spend considerable effort aggregating a large suite of open-source projects on top of their chosen base Linux distribution in order to provide a capable HPC environment for their users. They also frequently leverage a mix of external and in-house tools to perform software builds, provisioning, config management, software upgrades, and system diagnostics. Although the functionality is similar, the implementations across sites are often different, which can lead to duplication of effort. This presentation will use the above challenges as motivation for introducing a new, open-source HPC community (OpenHPC) that is focused on providing HPC-centric package builds for a variety of common building blocks in an effort to minimize duplication, implement integration testing to gain validation confidence, incorporate ongoing novel R&D efforts, and provide a platform to share configuration recipes from a variety of sites.

Bio

Karl W. Schulz received his Ph.D. in Aerospace Engineering from the University of Texas in 1999. After completing a one-year post-doc, he transitioned to the commercial software industry, working for the CD-Adapco group as a Senior Project Engineer to develop and support engineering software in the field of computational fluid dynamics (CFD). After several years in industry, Karl returned to the University of Texas in 2003, joining the research staff at the Texas Advanced Computing Center (TACC), a leading research center for advanced computational science, engineering and technology. During his 10-year tenure at TACC, Karl was actively engaged in HPC research, scientific curriculum development and teaching, technology evaluation and integration, and strategic initiatives, serving on the Center's leadership team as an Associate Director and leading TACC's HPC group and Scientific Applications group. He was a co-principal investigator on multiple Top-25 system deployments, serving as application scientist and principal architect for the cluster management software and HPC environment. Karl also served as the Chief Software Architect for the PECOS Center within the Institute for Computational Engineering and Sciences, a research group focusing on the development of next-generation software to support multi-physics simulations and uncertainty quantification.

Karl joined the Technical Computing Group at Intel in January 2014 and is presently a Principal Engineer engaged in the architecture, development, and validation of HPC system software. He maintains an active role in the open-source HPC community and helped lead the initial design and release of OpenHPC, a community effort focused on integration of common building blocks for HPC systems. He currently serves as the Project Leader for OpenHPC.

Abstract

High-performance computing systems at the embedded or extreme scales are a union of technologies and hardware subsystems, including memories, networks, processing elements, and inputs/outputs, with system software that ensures smooth interaction among components and provides a user environment to make the system productive. The recently launched Center for Advanced Technology Evaluation, dubbed CENATE, at Pacific Northwest National Laboratory is a first-of-its-kind computing proving ground. Before setting the next-generation, extreme-scale supercomputers to work solving some of the nation's biggest problems, CENATE's evaluation of early technologies to predict their overall potential and guide their designs will help hone future technology/systems, system software including MVAPICH, and applications before these high-cost machines make it to production.

CENATE uses a multitude of "tools of the trade," depending on the maturity of the technology under investigation. Research is being conducted in a laboratory setting that allows for measuring performance, power, reliability, and thermal effects. When actual hardware is not available for technologies early in their life cycle, modeling and simulation techniques for power, performance, and thermal modeling will be used. CENATE's Performance and Architecture Laboratory (PAL), a key technical capability, offers a unique modeling environment for high-performance computing systems and applications. In a near-turnkey way, CENATE will evaluate both complete system solutions and individual subsystem component technologies, from pre-production boards and technologies to full nodes and systems that pave the way to larger-scale production. Elements of the software stack including MVAPICH and its associated tools are of importance in this and are being used on our testbeds. One particular system of interest brings together both InfiniBand and Optical Circuit Switching into a single fabric. It will be interesting to see how this system can leverage MVAPICH in providing flexibility in the cluster network.

Bio

Darren Kerbyson is Associate Division Director and Laboratory Fellow in High Performance Computing at the Pacific Northwest National Laboratory. Prior to this he spent 10 years at Los Alamos leading the Performance and Architecture Lab (PAL). He received his BSc in Computer Systems Engineering in 1988 and his PhD in Computer Science in 1993, both from the University of Warwick (UK). Between 1993 and 2001 he was a faculty member in Computer Science at Warwick. His research interests include performance evaluation, performance and power modeling, and optimization of applications on current and future high performance systems, as well as image analysis. He has published over 140 papers in these areas over the last 20 years. He is a member of the IEEE Computer Society and the Association for Computing Machinery.

10:30 - 11:00

Break and Student Poster Session (Cont'd)

Abstract

The TAU Performance System is a powerful and highly versatile profiling and tracing tool ecosystem for performance analysis of parallel programs at all scales. Developed for almost two decades, TAU has evolved with each new generation of HPC systems and presently scales efficiently to hundreds of thousands of cores on the largest machines in the world. To meet the needs of computational scientists to evaluate and improve the performance of their applications, we present TAU's new features. This talk will describe the new instrumentation techniques to simplify the usage of performance tools including support for compiler-based instrumentation, rewriting binary files, preloading shared objects, automatic instrumentation at the source-code level, CUDA, OpenCL, and OpenACC instrumentation. It will describe how TAU interfaces with the MVAPICH MPI runtime using the MPI-T interface to gather low-level MPI statistics, controlling key MPI tunable parameters. The talk will also highlight TAU's analysis tools including its 3D Profile browser, ParaProf and cross-experiment analysis tool, PerfExplorer. http://tau.uoregon.edu

Bio

Dr. Sameer Shende has helped develop the TAU Performance System, the Program Database Toolkit (PDT), and the HPCLinux distro. His research interests include tools and techniques for performance instrumentation, measurement, analysis, runtime systems, and compiler optimizations. He serves as the Director of the Performance Research Laboratory at the University of Oregon, and as the President and Director of ParaTools, Inc., ParaTools, SAS, and ParaTools, Ltd.

Abstract

Transparent system-level checkpointing is a key component for providing fault-tolerance at the petascale level. In this talk, I will present some of the latest results involving large-scale transparent checkpointing of MPI applications using DMTCP (Distributed MultiThreaded CheckPointing) and MVAPICH2. In addition to the expected performance bottleneck involving full-memory dumps, some unexpected counter-intuitive practical issues were also discovered with computations involving as little as 8K cores. This talk will present some of those issues along with the proposed solutions.

Bio

Kapil Arya is a Distributed Systems Engineer at Mesosphere, Inc., where he works primarily on the open-source Apache Mesos project. At Mesosphere, Kapil's contributions include the design of the Mesos module system, IP-per-container functionality for Mesos containers, and more recently, security features for Mesosphere's Datacenter Operating System (DC/OS). He is also a visiting computer scientist at Northeastern University, where he is involved with the DMTCP checkpointing project. Previously, Kapil completed his PhD in computer science at Northeastern University with Prof. Gene Cooperman, working on checkpointing and the double-paging problem in virtual machines.

12:00 - 1:00

Lunch and Student Poster Session (Cont'd)

Abstract

This tutorial and hands-on session presents tools and techniques to assess the runtime tunable parameters exposed by the MVAPICH2 MPI library using the TAU Performance System. MVAPICH2 exposes MPI performance and control variables using the MPI_T interface that is now part of the MPI-3 standard. The tutorial will describe how to use TAU and MVAPICH2 for assessing the performance of the application and runtime. We present the complete workflow of performance engineering, including instrumentation, measurement (profiling and tracing, timing and PAPI hardware counters), data storage, analysis, and visualization. Emphasis is placed on how tools are used in combination for identifying performance problems and investigating optimization alternatives. Using their own notebook computers with a provided HPC Linux OVA image containing all of the necessary tools (running in a virtual machine), attendees will participate in a hands-on session that will use TAU and MVAPICH2 on a virtual machine and on remote clusters. This will prepare participants to locate and diagnose performance bottlenecks in their own parallel programs.

Bio

Dr. Sameer Shende has helped develop the TAU Performance System, the Program Database Toolkit (PDT), and the HPCLinux distro. His research interests include tools and techniques for performance instrumentation, measurement, analysis, runtime systems, and compiler optimizations. He serves as the Director of the Performance Research Laboratory at the University of Oregon, and as the President and Director of ParaTools, Inc., ParaTools, SAS, and ParaTools, Ltd.

Dr. Hari Subramoni is a research scientist in the Department of Computer Science and Engineering at the Ohio State University. His current research interests include high performance interconnects and protocols, parallel computer architecture, network-based computing, exascale computing, network topology aware computing, QoS, power-aware LAN-WAN communication, fault tolerance, virtualization, big data and cloud computing. He has published over 50 papers in international journals and conferences related to these research areas. He has been actively involved in various professional activities in academic journals and conferences. He is a member of IEEE. More details about Dr. Subramoni are available at http://www.cse.ohio-state.edu/~subramon.

Abstract

OSU INAM monitors InfiniBand clusters consisting of several thousand nodes in real time by querying various subnet management entities in the network. It is also capable of interacting with the MVAPICH2-X software stack to gain insights into the communication pattern of the application and classify the data transferred into Point-to-Point, Collective and Remote Memory Access (RMA). In conjunction with MVAPICH2-X, OSU INAM can also remotely monitor several parameters of MPI processes, such as CPU/memory utilization and intra- and inter-node communication buffer utilization. OSU INAM provides the flexibility to analyze and profile collected data at process-level, node-level, job-level, and network-level as specified by the user.

In this demo, we show how users can take advantage of the various features of INAM to analyze and visualize the communication happening in the network in conjunction with data obtained from the MPI library. We will, for instance, demonstrate how INAM can 1) filter the traffic flowing on a link on a per-job or per-process basis in conjunction with MVAPICH2-X, 2) analyze and visualize the traffic in a live or historical fashion at various user-specified granularities, and 3) identify the various entities that utilize a given network link.

Bio

Dr. Hari Subramoni is a research scientist in the Department of Computer Science and Engineering at the Ohio State University. His current research interests include high performance interconnects and protocols, parallel computer architecture, network-based computing, exascale computing, network topology aware computing, QoS, power-aware LAN-WAN communication, fault tolerance, virtualization, big data and cloud computing. He has published over 50 papers in international journals and conferences related to these research areas. He has been actively involved in various professional activities in academic journals and conferences. He is a member of IEEE. More details about Dr. Subramoni are available at http://www.cse.ohio-state.edu/~subramon.

Dr. Khaled Hamidouche is a research scientist in the Department of Computer Science and Engineering at the Ohio State University. His current research interests include parallel computer architecture, parallel programming, network-based computing, exascale computing, heterogeneous computing, power-aware computing, fault tolerance, virtualization, and cloud computing. He has published over 40 papers in international journals and conferences related to these research areas. He has been actively involved in various professional activities in academic journals and conferences. He is a member of IEEE. More details about Dr. Hamidouche are available at http://nowlab.cse.ohio-state.edu/member/hamidouc.

3:00 - 3:30

Break

Abstract

The tutorial will start with an overview of the MVAPICH2 libraries and their features. Next, we will focus in depth on installation guidelines, runtime optimizations and tuning flexibility. An overview of configuration and debugging support in MVAPICH2 libraries will be presented. Support for GPU- and MIC-enabled systems will be presented. The impact on performance of the various features and optimization techniques will be discussed in an integrated fashion. "Best Practices" for a set of common applications will be presented. A set of case studies related to application redesign will be presented to take advantage of hybrid MPI+PGAS programming models. Finally, the use of MVAPICH2-EA and MVAPICH2-Virt libraries will be explained.

Bio

Dr. Hari Subramoni is a research scientist in the Department of Computer Science and Engineering at the Ohio State University. His current research interests include high performance interconnects and protocols, parallel computer architecture, network-based computing, exascale computing, network topology aware computing, QoS, power-aware LAN-WAN communication, fault tolerance, virtualization, big data and cloud computing. He has published over 50 papers in international journals and conferences related to these research areas. He has been actively involved in various professional activities in academic journals and conferences. He is a member of IEEE. More details about Dr. Subramoni are available at http://www.cse.ohio-state.edu/~subramon.

Dr. Khaled Hamidouche is a research scientist in the Department of Computer Science and Engineering at the Ohio State University. His current research interests include parallel computer architecture, parallel programming, network-based computing, exascale computing, heterogeneous computing, power-aware computing, fault tolerance, virtualization, and cloud computing. He has published over 40 papers in international journals and conferences related to these research areas. He has been actively involved in various professional activities in academic journals and conferences. He is a member of IEEE. More details about Dr. Hamidouche are available at http://nowlab.cse.ohio-state.edu/member/hamidouc.